Logging in to the Ansible Automation Platform

The default login user for the AAP interface is admin, and the password is randomly generated during installation. This password is required to access the interface.

However, you do not need to log in to the interface, because the pattern configures the AAP instance automatically. The pattern retrieves the password by using the same method as the ansible_get_credentials.sh script (described below).

If you need to inspect the AAP instance or change its configuration, there are two ways to log in. Both methods give access to the same instance using the same password.

Logging in using a secret retrieved from the OpenShift Console

Follow these steps to log in to the Ansible Automation Platform using the OpenShift console:

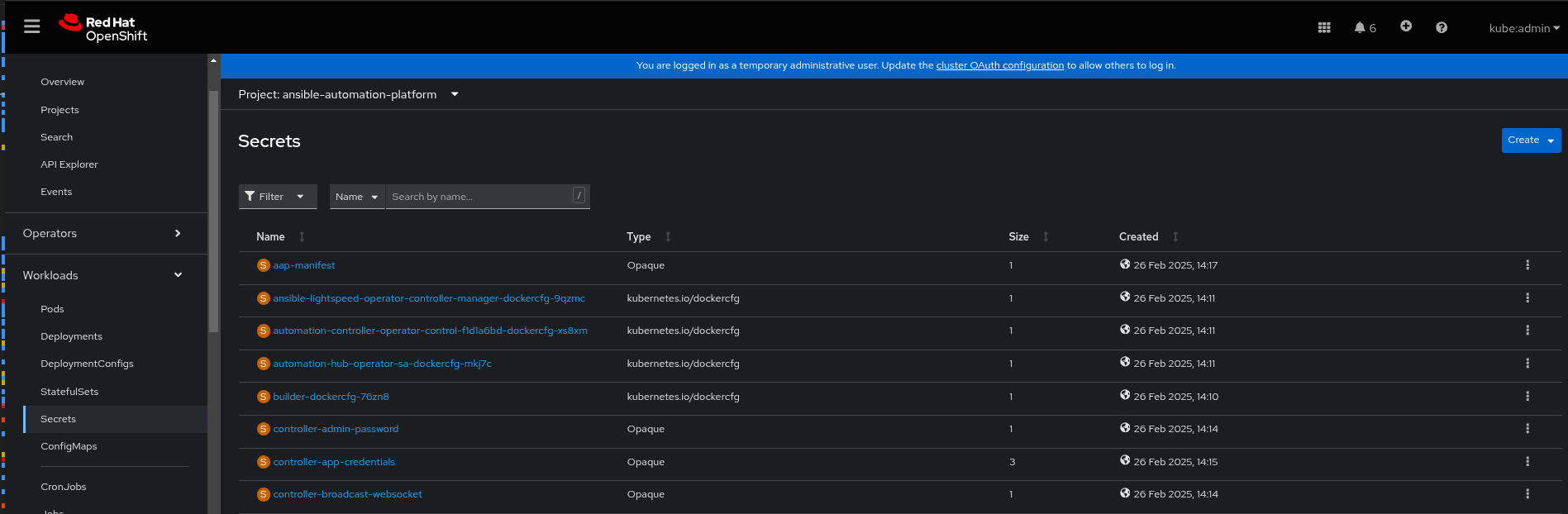

In the OpenShift console, go to Workloads > Secrets and select the

ansible-automation-platformproject if you want to limit the number of secrets you can see. Figure 1. AAP secret

Figure 1. AAP secretSelect the

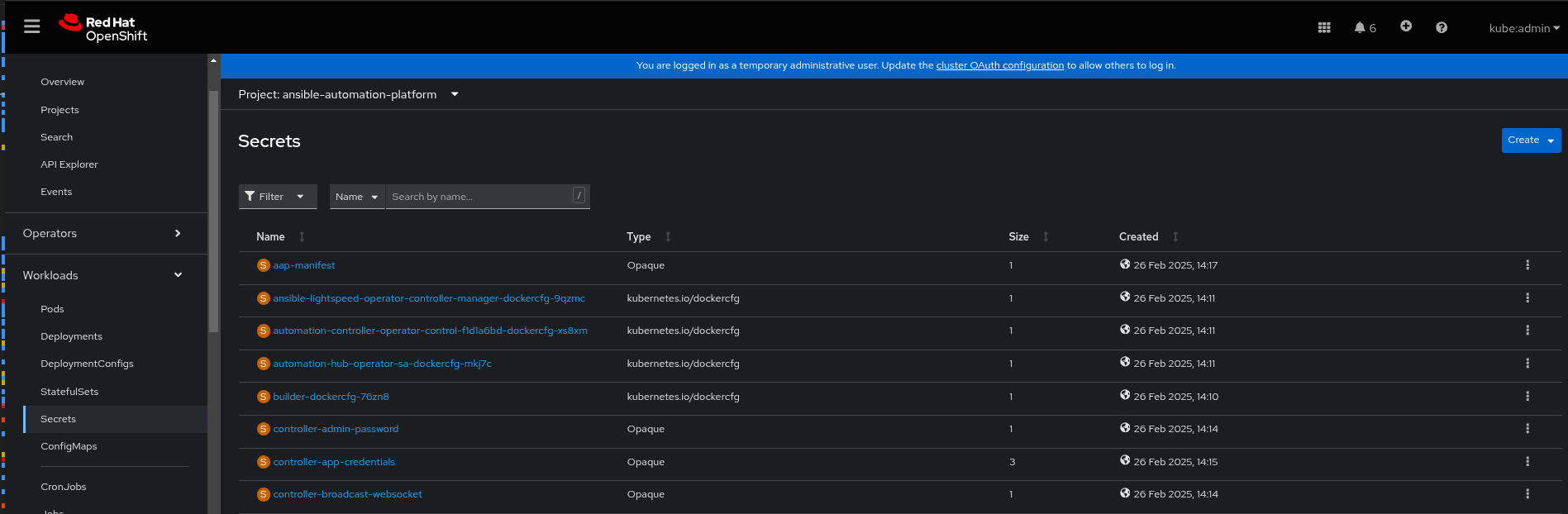

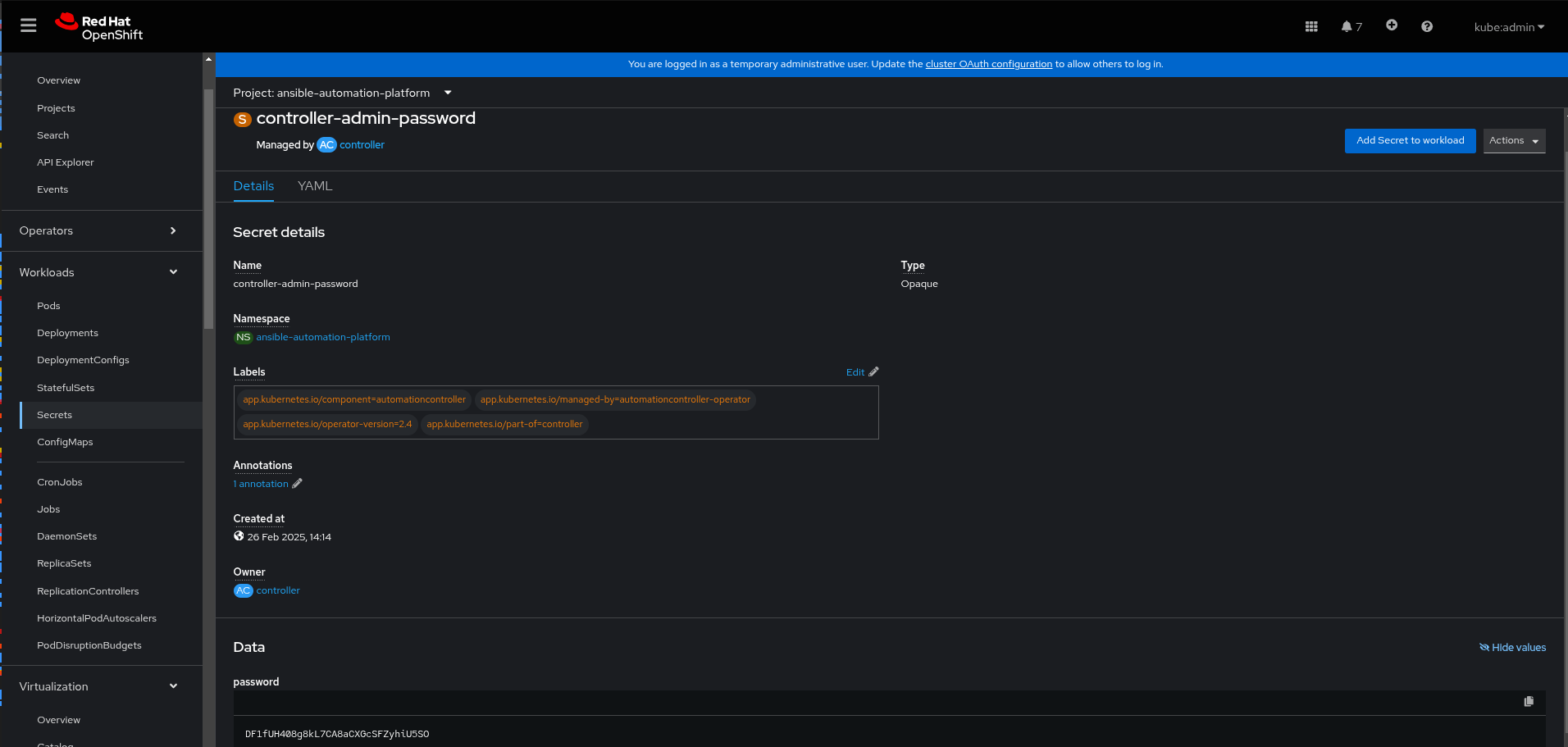

aap-admin-password.In the Data field click Reveal values to display the password.

Figure 2. AAP secret details

Figure 2. AAP secret details

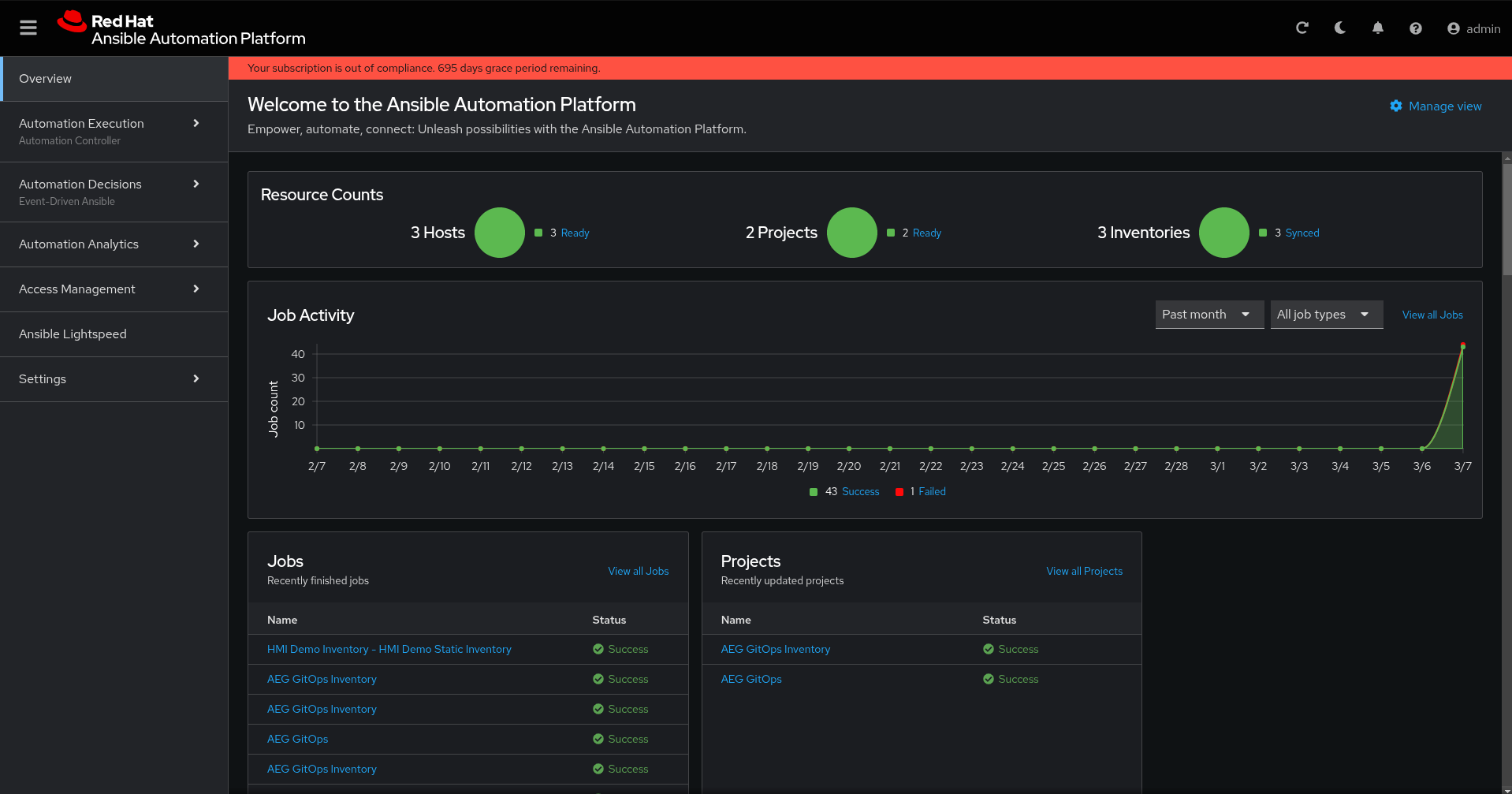

Under Networking > Routes, click the URL for the

aaproute to open the Ansible Automation Platform interface.Log in using the

adminuser and the password you retrieved from theaap-admin-passwordsecret. A screen similar to the following appears: Figure 3. AAP login

Figure 3. AAP login
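The same secret and route can also be read from the command line instead of the console. The following is a minimal sketch, assuming the oc CLI is logged in to the cluster and that the password is stored under a data key named password (an assumption; check the key shown in the Data field of the secret). The function names and the AAP_NAMESPACE variable are illustrative only.

```shell
# Namespace taken from the project name used in the console steps;
# override AAP_NAMESPACE if your installation differs.
AAP_NAMESPACE="${AAP_NAMESPACE:-ansible-automation-platform}"

# Print the decoded admin password from the aap-admin-password secret.
# ASSUMPTION: the secret stores the value under the key "password".
aap_admin_password() {
  oc get secret aap-admin-password -n "$AAP_NAMESPACE" \
    -o jsonpath='{.data.password}' | base64 --decode
}

# Print the HTTPS URL built from the host of the aap route.
aap_url() {
  printf 'https://%s\n' \
    "$(oc get route aap -n "$AAP_NAMESPACE" -o jsonpath='{.spec.host}')"
}
```

After sourcing these functions, running aap_url and aap_admin_password prints the values that the console steps reveal by hand.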

Logging in using a secret retrieved with ansible_get_credentials.sh

Follow this procedure to log in to the Ansible Automation Platform using the ansible_get_credentials.sh script:

From the top-level pattern directory (ensuring you have set KUBECONFIG), run the following command:

    $ ./pattern.sh ./scripts/ansible_get_credentials.sh

This script retrieves the URL for your Ansible Automation Platform instance and the password for its admin user. The password is auto-generated by the AAP operator by default. The output of the command looks like this (your password will be different):

    [WARNING]: No inventory was parsed, only implicit localhost is available

    PLAY [Retrieve Credentials for AAP on OpenShift] *******************************************************************

    TASK [Retrieve API hostname for AAP] *******************************************************************
    ok: [localhost]

    TASK [Set ansible_host] *****************************************************************
    ok: [localhost]

    TASK [Retrieve admin password for AAP] *****************************************************************************
    ok: [localhost]

    TASK [Set admin_password fact] ****************************************************************************************
    ok: [localhost]

    TASK [Report AAP Endpoint] *****************************************************************************************
    ok: [localhost] => {
        "msg": "AAP Endpoint: https://aap-ansible-automation-platform.apps.kevstestcluster.aws.validatedpatterns.io"
    }

    TASK [Report AAP User] ******************************************************************************
    ok: [localhost] => {
        "msg": "AAP Admin User: admin"
    }

    TASK [Report AAP Admin Password] *******************************************************************
    ok: [localhost] => {
        "msg": "AAP Admin Password: XoQ2MoU88ibAwUZI8tHu194DP304UEqz"
    }

    PLAY RECAP *******************************************************************************
    localhost : ok=7 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
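If you want to use these values in a script rather than copy them by hand, the "msg" lines can be filtered out of the playbook output. The following is a rough sketch, assuming the output keeps the one-"msg"-per-line layout shown in the sample; extract_aap_field is a hypothetical helper, not part of the pattern.

```shell
# Read ansible_get_credentials.sh output on stdin and print the value that
# follows the given label (for example "AAP Endpoint") in a "msg" line.
# ASSUMPTION: each report is printed on its own line as  "msg": "<label>: <value>"
extract_aap_field() {
  sed -n "s/.*\"msg\": \"$1: \(.*\)\".*/\1/p"
}
```

For example, saving the script output once with creds="$(./pattern.sh ./scripts/ansible_get_credentials.sh)" and then running printf '%s\n' "$creds" | extract_aap_field "AAP Admin Password" would print only the password, without running the playbook a second time.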

Pattern AAP Configuration Details

This section describes how AAP is configured during pattern installation. All of the configuration discussed in this section is applied by the ansible_load_controller.sh script.

The ansible_load_controller.sh script automates the configuration of the Ansible Automation Platform (AAP) by executing a series of Ansible playbooks. These playbooks perform tasks such as retrieving credentials, parsing secrets, and configuring the AAP instance.

Key components of the configuration process:

- Retrieving AAP Credentials: The script runs the ansible_get_credentials.yml playbook to obtain necessary credentials for accessing and managing the AAP instance.
- Parsing Secrets: It then executes the parse_secrets_from_values_secret.yml playbook to extract and process sensitive information stored in the values_secret.yaml file, which includes passwords, tokens, or other confidential data required for configuration.
- Configuring the AAP Instance: Finally, the script runs the ansible_configure_controller.yml playbook to set up and configure the AAP controller based on the retrieved credentials and parsed secrets.
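The ordering of the three playbooks can be pictured with a short sketch. This is not the actual ansible_load_controller.sh; it only illustrates passing the playbooks named above to ansible-playbook in the order described. The PLAYBOOK_DIR location, the ANSIBLE_PLAYBOOK override, and the function name are assumptions made for illustration.

```shell
# Hypothetical sketch only; the real script may pass extra variables or options.
# ASSUMPTION: the playbooks live in a directory named "ansible".
PLAYBOOK_DIR="${PLAYBOOK_DIR:-ansible}"

# Run the three playbooks in order in a single ansible-playbook invocation.
# ANSIBLE_PLAYBOOK can be set to another command (for example "echo") for a dry run.
run_controller_playbooks() {
  "${ANSIBLE_PLAYBOOK:-ansible-playbook}" \
    "${PLAYBOOK_DIR}/ansible_get_credentials.yml" \
    "${PLAYBOOK_DIR}/parse_secrets_from_values_secret.yml" \
    "${PLAYBOOK_DIR}/ansible_configure_controller.yml"
}
```

Calling ANSIBLE_PLAYBOOK=echo run_controller_playbooks prints the playbook paths in execution order without contacting the cluster.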