Intel AMX accelerated Multicloud GitOps with OpenShift AI

About the Intel AMX accelerated Multicloud GitOps pattern with OpenShift AI

- Use case

Use a GitOps approach to manage hybrid and multi-cloud deployments across both public and private clouds.

Enable cross-cluster governance and application lifecycle management.

Accelerate AI operations and improve computational performance by using Intel Advanced Matrix Extensions (Intel AMX) together with the Red Hat OpenShift AI Operator.

Securely manage secrets across the deployment.

Based on the requirements of a specific implementation, certain details might differ. However, all validated patterns that are based on a portfolio architecture generalize one or more successful deployments of a use case.

- Background

Organizations aim to develop, deploy, and operate applications on an open hybrid cloud in a stable, simple, and secure way. This hybrid strategy includes multi-cloud deployments where workloads might run on multiple clusters and on multiple clouds, private or public. This strategy requires an infrastructure-as-code approach: GitOps. GitOps uses Git repositories as a single source of truth to deliver infrastructure as code. Submitted code goes through the continuous integration (CI) process, while the continuous delivery (CD) process checks and applies requirements for things like security, infrastructure as code, or any other boundaries set for the application framework. All changes to code are tracked, which makes updates easy and provides version control should a rollback be needed. Moreover, organizations are looking for solutions that increase efficiency and reduce costs at the same time. This is possible with 5th Generation Intel Xeon Scalable processors and their new built-in accelerator, Intel Advanced Matrix Extensions (Intel AMX).
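The core GitOps idea is to compare what Git declares with what the cluster runs, and to report any drift. A minimal Python sketch of that comparison follows. It is hypothetical and is not how Argo CD is implemented. The desired and live states are plain nested dictionaries standing in for Kubernetes manifests. Only fields declared in Git are compared, so fields the cluster fills in itself, such as `status`, do not count as drift.

```python
from typing import Any, Dict, List, Tuple

# (dotted field path, live value, desired value)
Change = Tuple[str, Any, Any]


def diff_state(desired: Dict[str, Any], live: Dict[str, Any], prefix: str = "") -> List[Change]:
    """List every field declared in Git whose live value differs.

    Only keys present in `desired` are compared, so fields the cluster adds
    on its own (for example `status`) are ignored.
    """
    changes: List[Change] = []
    for key in sorted(desired):
        path = f"{prefix}.{key}" if prefix else key
        want, have = desired[key], live.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            # Recurse into nested objects such as spec.template.
            changes.extend(diff_state(want, have, path))
        elif want != have:
            changes.append((path, have, want))
    return changes


def in_sync(desired: Dict[str, Any], live: Dict[str, Any]) -> bool:
    """True when the live state matches everything the Git revision declares."""
    return not diff_state(desired, live)


# Hypothetical Deployment-like manifests: Git says 3 replicas, the cluster runs 2.
desired = {"spec": {"replicas": 3, "template": {"image": "app:v2"}}}
live = {"spec": {"replicas": 2, "template": {"image": "app:v2"}}, "status": {"ready": 2}}
print(diff_state(desired, live))  # [('spec.replicas', 2, 3)]
```

In this model, a rollback means reconciling the cluster against the `desired` state taken from an earlier Git revision, which is why tracked history makes rollbacks straightforward.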

About the solution

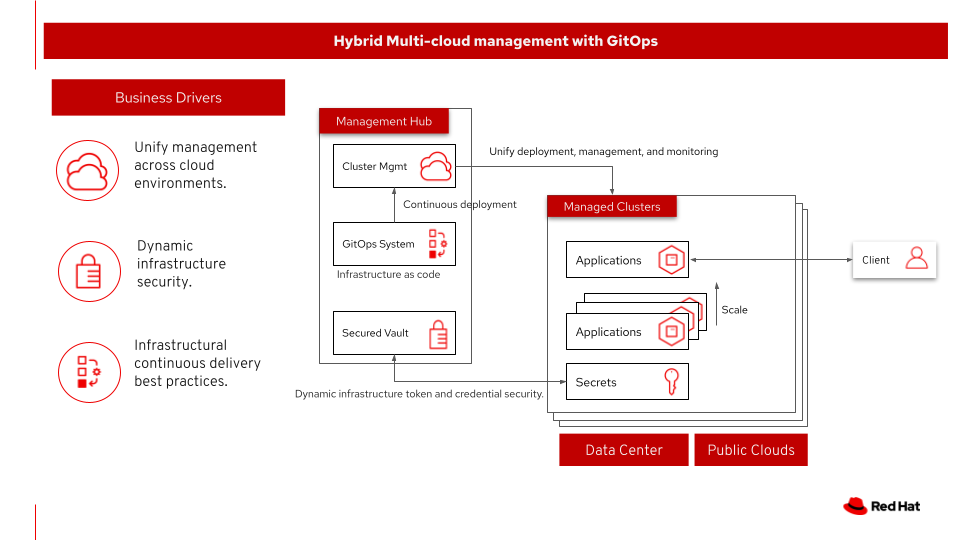

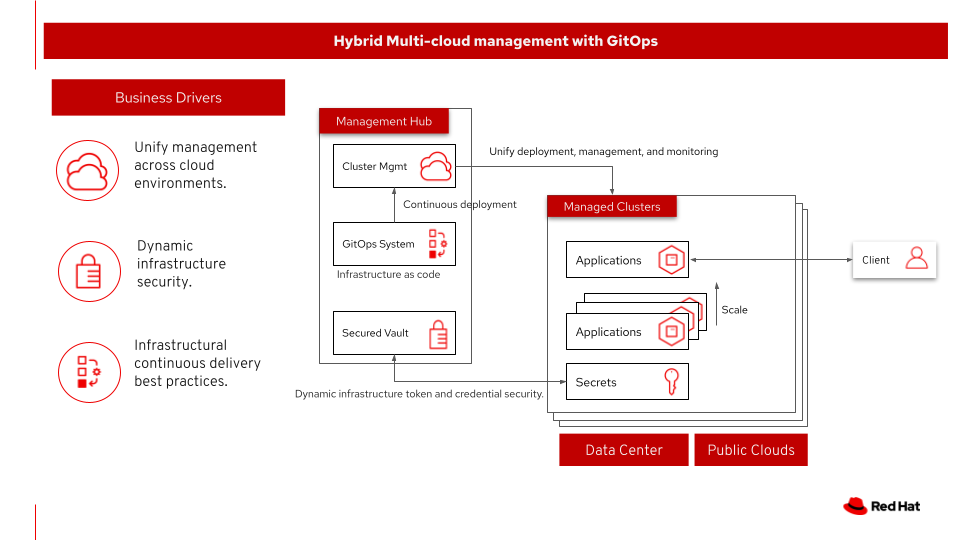

This architecture covers hybrid and multi-cloud management with GitOps, as shown in Figure 1. At a high level, it requires a management hub for DevOps and GitOps, and infrastructure that extends to one or more managed clusters running on private or public clouds. The automated infrastructure-as-code approach manages the versioning of components and deploys them according to the infrastructure-as-code configuration.

Benefits of hybrid multicloud management with GitOps:

Unified management across cloud environments.

Dynamic infrastructure security.

Infrastructural continuous delivery best practices.

Figure 1. Overview of the solution including the business drivers, management hub, and the clusters under management

About the technology

The following technologies are used in this solution:

- Red Hat OpenShift Platform

An enterprise-ready Kubernetes container platform built for an open hybrid cloud strategy. It provides a consistent application platform to manage hybrid cloud, public cloud, and edge deployments. It delivers a complete application platform for both traditional and cloud-native applications, allowing them to run anywhere. OpenShift has a preconfigured, preinstalled, and self-updating monitoring stack that provides monitoring for core platform components. It also supports external secret management systems, such as HashiCorp Vault in this solution, for securely adding secrets to the OpenShift platform.

- Red Hat OpenShift GitOps

A declarative continuous delivery tool for Kubernetes applications, based on the Argo CD project. Application definitions, configurations, and environments are declarative and version controlled in Git. It can automatically push the desired application state into a cluster, quickly determine whether the application state is in sync with the desired state, and manage applications in multi-cluster environments.

- Red Hat Advanced Cluster Management for Kubernetes

Controls clusters and applications from a single console, with built-in security policies. Extends the value of Red Hat OpenShift by deploying apps, managing multiple clusters, and enforcing policies across multiple clusters at scale.

- Red Hat Ansible Automation Platform

Provides an enterprise framework for building and operating IT automation at scale across hybrid clouds including edge deployments. It enables users across an organization to create, share, and manage automation, from development and operations to security and network teams.

- Node Feature Discovery (NFD) Operator

Manages the detection of hardware features and configuration in an OpenShift Container Platform cluster by labeling the nodes with hardware-specific information. NFD labels each host with node-specific attributes, such as PCI cards, kernel, and operating system version.

- Red Hat OpenShift AI

A flexible, scalable MLOps platform with tools to build, deploy, and manage AI-enabled applications. OpenShift AI (previously called Red Hat OpenShift Data Science) supports the full lifecycle of AI/ML experiments and models, both on-premises and in the public cloud.

- OpenVINO Toolkit Operator

The operator includes OpenVINO™ Notebooks for developing AI-optimized workloads and OpenVINO™ Model Server for deployment. The Model Server runs AI inference at scale and exposes AI models through gRPC and REST APIs.

- Intel® Advanced Matrix Extensions

A new built-in accelerator that improves the performance of deep-learning training and inference on the CPU and is ideal for workloads like natural-language processing, recommendation systems and image recognition.

- HashiCorp Vault

Provides a secure, centralized secrets store for dynamic infrastructure and applications across clusters, including over low-trust networks between clouds and data centers.

This solution also uses a variety of observability tools. These include Prometheus monitoring and Grafana dashboards, which are integrated with OpenShift, and components of the Observatorium meta-project, which include Thanos and the Loki API.
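Before scheduling AMX-accelerated workloads, you can check whether a node actually advertises Intel AMX. There are two places to look: the labels that Node Feature Discovery applies to the node, or the CPU flags visible from inside a pod. The following Python sketch makes two assumptions. First, it assumes NFD's `cpu-cpuid` label naming, such as `feature.node.kubernetes.io/cpu-cpuid.AMXTILE`. Second, it assumes the Linux `/proc/cpuinfo` flag names `amx_tile`, `amx_bf16`, and `amx_int8`. Verify both against the NFD version and kernel in your cluster.

```python
from typing import Mapping

# Assumed NFD label prefix and cpuid feature names for Intel AMX.
NFD_PREFIX = "feature.node.kubernetes.io/cpu-cpuid."
AMX_FEATURES = ("AMXTILE", "AMXBF16", "AMXINT8")

# Assumed Linux /proc/cpuinfo flag names for the same features.
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def node_has_amx(labels: Mapping[str, str]) -> bool:
    """True if every assumed AMX cpuid label is present and set to "true"."""
    return all(labels.get(NFD_PREFIX + feature) == "true" for feature in AMX_FEATURES)


def cpuinfo_has_amx(cpuinfo_text: str) -> bool:
    """True if the first 'flags' line of /proc/cpuinfo lists all AMX flags."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            flags = set(line.split(":", 1)[1].split())
            return AMX_FLAGS <= flags
    return False
```

The label check suits tooling that reads node objects from the Kubernetes API. Inside a running notebook, passing the contents of `/proc/cpuinfo` to `cpuinfo_has_amx` is the quicker check.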

Intel AMX accelerated Multicloud GitOps pattern with OpenShift AI

The basic Multicloud GitOps pattern has been extended to highlight the capabilities of 5th Generation Intel Xeon Scalable processors. It offers developers a streamlined path to accelerating AI workloads with the built-in Intel AMX accelerator, improving efficiency and performance.

The basic pattern has been extended with two components: Red Hat OpenShift AI and the OpenVINO Toolkit Operator.

Red Hat OpenShift AI serves as a robust AI/ML platform for creating AI-driven applications. It provides a collaborative environment that helps data scientists and developers move from experiment to production. It offers a Jupyter application with a selection of notebook servers. These servers come with preconfigured environments and the necessary support and optimizations, such as CUDA, PyTorch, TensorFlow, and HabanaAI.

The OpenVINO Toolkit Operator manages OpenVINO components within an OpenShift environment. The first component, OpenVINO™ Model Server (OVMS), is a scalable, high-performance solution for serving machine learning models optimized for Intel® architectures. The second component used in this pattern is the Notebook resource. It integrates Jupyter from OpenShift AI with a container image that includes developer tools from the OpenVINO toolkit. It also lets users select the OpenVINO™ Toolkit image from the image list in the Jupyter spawner.
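OpenVINO Model Server accepts inference requests over REST as well as gRPC. The following Python sketch uses only the standard library. It builds a request in the KServe v2 inference protocol format (`POST /v2/models/<name>/infer`) but does not send it. The service URL `http://ovms-bert:8080`, the model name `bert-large`, and the tensor names are hypothetical placeholders. The real values depend on how the model is exported and deployed.

```python
import json
import urllib.request
from typing import Dict, List


def build_v2_infer_request(
    base_url: str, model: str, inputs: Dict[str, List[int]]
) -> urllib.request.Request:
    """Build (but do not send) a KServe v2 REST inference request.

    Each entry in `inputs` becomes one INT64 tensor of shape [1, sequence_length],
    that is, a batch containing a single sequence.
    """
    body = {
        "inputs": [
            {"name": name, "shape": [1, len(values)], "datatype": "INT64", "data": values}
            for name, values in inputs.items()
        ]
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v2/models/{model}/infer",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint, model name, and tensor names.
request = build_v2_infer_request(
    "http://ovms-bert:8080",
    "bert-large",
    {"input_ids": [101, 102], "attention_mask": [1, 1], "token_type_ids": [0, 0]},
)
# Sending it requires a reachable OVMS instance, for example:
# with urllib.request.urlopen(request) as response:
#     outputs = json.load(response)["outputs"]
```

The request is built separately from the network call, so the payload can be inspected or tested without a running server.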

The pattern uses the BERT-Large model as an example of an AI workload that takes advantage of Intel AMX. BERT-Large inference runs in a Jupyter notebook that uses OpenVINO optimizations.
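For question answering, a BERT model takes a question and a context as three aligned integer sequences. The following Python sketch shows that input layout. It assumes token IDs from an uncased BERT WordPiece vocabulary, where `[PAD]`=0, `[CLS]`=101, and `[SEP]`=102. The helper name `encode_pair` and the lengths used are illustrative. The notebook in the pattern may use a different tokenizer, sequence length, or input names.

```python
from typing import Dict, List

# Assumed special-token IDs from an uncased BERT WordPiece vocabulary.
PAD_ID, CLS_ID, SEP_ID = 0, 101, 102


def encode_pair(question: List[int], context: List[int], max_len: int) -> Dict[str, List[int]]:
    """Lay out [CLS] question [SEP] context [SEP], padded to max_len.

    Returns input_ids, attention_mask (1 = real token, 0 = padding) and
    token_type_ids (0 = question segment, 1 = context segment).
    """
    # Reserve room for [CLS] and two [SEP] tokens; only the context is truncated.
    budget = max_len - 3 - len(question)
    if budget < 0:
        raise ValueError("question does not fit in max_len")
    context = context[:budget]

    ids = [CLS_ID] + question + [SEP_ID] + context + [SEP_ID]
    segments = [0] * (len(question) + 2) + [1] * (len(context) + 1)
    pad = max_len - len(ids)
    return {
        "input_ids": ids + [PAD_ID] * pad,
        "attention_mask": [1] * len(ids) + [0] * pad,
        "token_type_ids": segments + [0] * pad,
    }
```

Truncating the context rather than the question keeps the query intact. Production pipelines usually split long contexts into overlapping windows instead of discarding the tail, because the answer may lie beyond the cut.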

As a side note, BERT-Large is a widely known model used in many enterprise natural language processing (NLP) workloads. Intel has demonstrated that 5th Generation Intel Xeon Scalable processors perform up to 1.49 times better in NLP flows on Red Hat OpenShift than the prior generation of processors. Read more: Level Up Your NLP applications on Red Hat OpenShift and 5th Gen

Next steps

Deploy the management hub using Helm.

Add a managed cluster to deploy the managed cluster components by using ACM.