Deploying the RamenDR Starter Kit Pattern

Prerequisites

An OpenShift cluster.

To create an OpenShift cluster, go to the Red Hat Hybrid Cloud console.

Select OpenShift → Red Hat OpenShift Container Platform → Create cluster.

A GitHub account with a personal access token that has repository read and write permissions.

The Helm binary. For instructions, see Installing Helm.

Additional installation tool dependencies. For details, see Patterns quick start.

Ideally, use one cluster for deploying the GitOps management hub assets and one or more separate clusters for the managed clusters.

Preparing for deployment

Fork the ramendr-starter-kit repository on GitHub. You must fork the repository because your fork is updated as part of the GitOps and DevOps processes.

Clone the forked copy of this repository:

$ git clone git@github.com:your-username/ramendr-starter-kit.git

Go to the root directory of your Git repository:

$ cd /path/to/your/repository

Run the following command to set the upstream repository:

$ git remote add -f upstream git@github.com:validatedpatterns/ramendr-starter-kit.git

Verify the setup of your remote repositories by running the following command:

$ git remote -v

Example output

origin git@github.com:kquinn1204/ramendr-starter-kit.git (fetch)
origin git@github.com:kquinn1204/ramendr-starter-kit.git (push)
upstream git@github.com:validatedpatterns/ramendr-starter-kit.git (fetch)
upstream git@github.com:validatedpatterns/ramendr-starter-kit.git (push)

Make a local copy of the secrets template outside of your repository to hold credentials for the pattern.

Do not add, commit, or push this file to your repository. Doing so may expose personal credentials to GitHub.

Run the following command:

$ cp values-secret.yaml.template ~/values-secret.yaml

Populate this file with the secrets, or credentials, that are needed to deploy the pattern successfully:

$ vi ~/values-secret.yaml

Edit the vm-ssh section to include the username, private key, and public key. So that the pattern deploys smoothly, the privatekey and publickey fields take a path to a key file rather than an inline value. For example:

    - name: vm-ssh
      vaultPrefixes:
      - global
      fields:
      - name: username
        value: 'cloud-user'
      - name: privatekey
        path: '/path/to/private-ssh-key'
      - name: publickey
        path: '/path/to/public-ssh-key'

Paste the paths to your locally stored private and public keys. If you do not have a key pair, generate one by using ssh-keygen.

Edit the cloud-init section to include the userData block to use with cloud-init. For example:

    - name: cloud-init
      vaultPrefixes:
      - global
      fields:
      - name: userData
        value: |-
          #cloud-config
          user: 'cloud-user'
          password: 'cloud-user'
          chpasswd: { expire: False }

Edit the aws section to refer to the file containing your AWS credentials:

    - name: aws
      fields:
      - name: aws_access_key_id
        ini_file: ~/.aws/credentials
        ini_key: aws_access_key_id
      - name: aws_secret_access_key
        ini_file: ~/.aws/credentials
        ini_key: aws_secret_access_key
      - name: baseDomain
        value: aws.example.com
      - name: pullSecret
        path: ~/pull_secret.json
      - name: ssh-privatekey
        path: ~/.ssh/privatekey
      - name: ssh-publickey
        path: ~/.ssh/publickey

Edit the openshiftPullSecret section to refer to the file containing your OpenShift pull secret:

    - name: openshiftPullSecret
      fields:
      - name: .dockerconfigjson
        path: ~/pull_secret.json
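Because the path and ini_file fields above point to files on your workstation, it can help to confirm that those files exist before you deploy. The following is a minimal sketch of such a pre-check and is not part of the pattern: the function name check_secret_files is hypothetical, and you supply the list of files to check.

```shell
#!/bin/sh
# Hypothetical helper (not part of the pattern): report which of the given
# files are missing or unreadable. A leading "~/" is expanded to $HOME so
# that paths can be passed exactly as they are written in values-secret.yaml.
check_secret_files() {
    missing=0
    for f in "$@"; do
        case "$f" in
            '~/'*) f="$HOME/${f#??}" ;;   # strip the literal "~/" prefix
        esac
        if [ ! -r "$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, with the sample paths shown above you might run check_secret_files '~/.aws/credentials' '~/pull_secret.json' '~/.ssh/privatekey' '~/.ssh/publickey'. The function prints one line per file it cannot read and returns non-zero if any file is missing.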

Create and switch to a new branch named my-branch by running the following command:

$ git checkout -b my-branch

The pattern infers the baseDomain of your cluster from the clusterDomain, which is tracked by the pattern operator. Previously, setting this value required forking the pattern; that is no longer the case. You might still want to change other settings in the RDR chart’s values file, such as the aws.region settings. This file is at hub/rdr/values.yaml. If you customize this or other files, you must fork the pattern so that Argo CD sees the changes.

If you changed this or any other files tracked by Git, stage them with git add and then commit the changes by running the following command:

$ git commit -m "any updates"

Push the changes to your forked repository:

$ git push origin my-branch

The preferred way to install this pattern is by using the ./pattern.sh script.
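Before you move on to deployment, you may want to confirm that the command-line tools this procedure uses are installed. The sketch below is one way to do that; the function name missing_tools is hypothetical, and the tool list in the usage note (git, helm, oc) covers only the tools named on this page, not the full set of dependencies from Patterns quick start.

```shell
#!/bin/sh
# Hypothetical preflight helper: print each named command that is not on
# PATH, and return non-zero if at least one is missing.
missing_tools() {
    status=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "not found: $tool"
            status=1
        fi
    done
    return "$status"
}
```

Running missing_tools git helm oc prints nothing and returns zero when all three commands are available.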

Deploying the pattern by using the pattern.sh file

To deploy the pattern by using the pattern.sh file, complete the following steps:

Log in to your cluster by following this procedure:

Obtain an API token by visiting https://oauth-openshift.apps.<your-cluster>.<domain>/oauth/token/request.

Log in to the cluster by running the following command:

$ oc login --token=<retrieved-token> --server=https://api.<your-cluster>.<domain>:6443

Alternatively, set the KUBECONFIG environment variable to the path of your kubeconfig file:

$ export KUBECONFIG=~/<path_to_kubeconfig>
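The two URLs in the login steps follow the same naming scheme, so they can be derived from the cluster name and domain. The following sketch only assembles the strings shown above; the function names are hypothetical, and mycluster and example.com are made-up placeholder values.

```shell
#!/bin/sh
# Build the URLs used in the login steps from a cluster name ($1) and a
# domain ($2). Both values are placeholders that you supply.
token_request_url() {
    printf 'https://oauth-openshift.apps.%s.%s/oauth/token/request\n' "$1" "$2"
}

api_server_url() {
    printf 'https://api.%s.%s:6443\n' "$1" "$2"
}

# Example with made-up values:
token_request_url mycluster example.com
api_server_url mycluster example.com
```

The output of api_server_url can be passed to the --server option of the oc login command shown above.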

Deploy the pattern to your cluster. Run the following command:

$ ./pattern.sh make install

Verify that the Operators have been installed on the hub cluster. Navigate to the Operators → Installed Operators page in the OpenShift Container Platform web console on the hub cluster (in the "local-cluster" view):

Figure 1. RamenDR Hub Operators

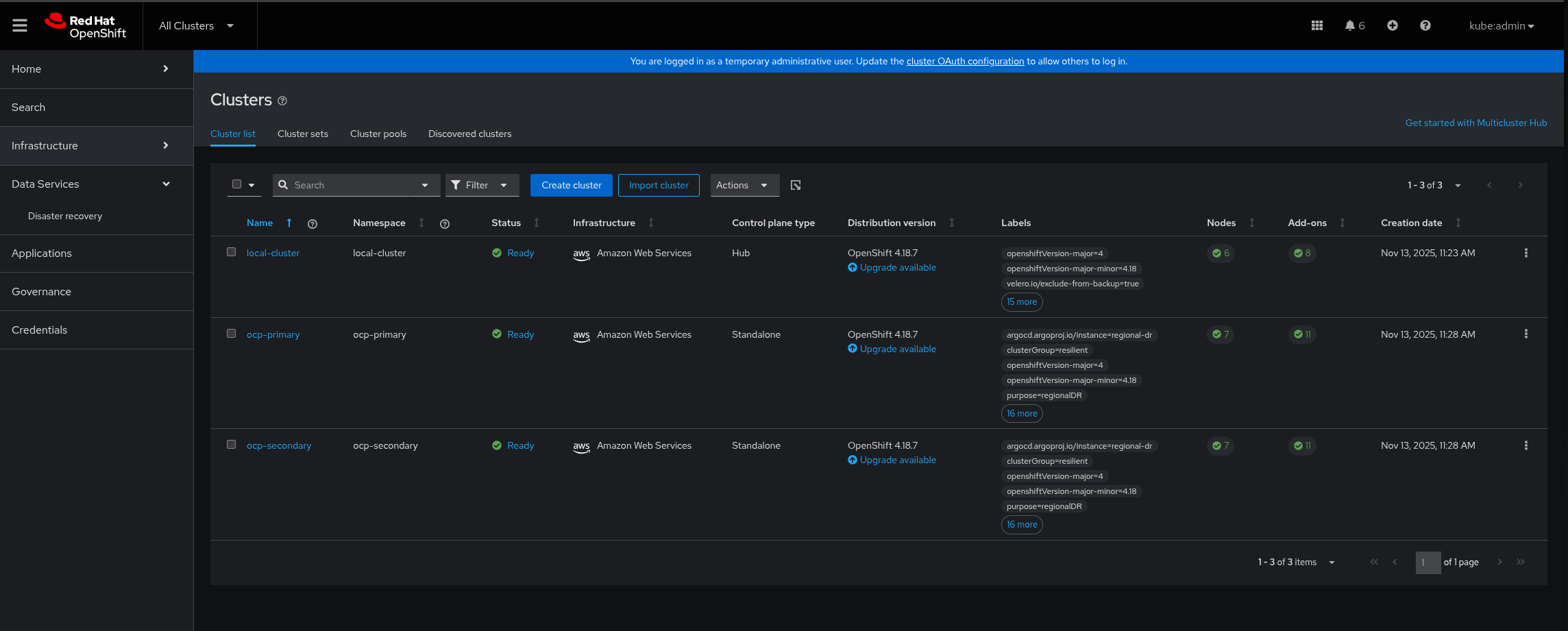

Verify that the primary and secondary managed clusters have been built. This can take close to an hour on AWS. On the hub cluster, navigate to All Clusters in the OpenShift Container Platform web console:

Figure 2. RamenDR Clusters

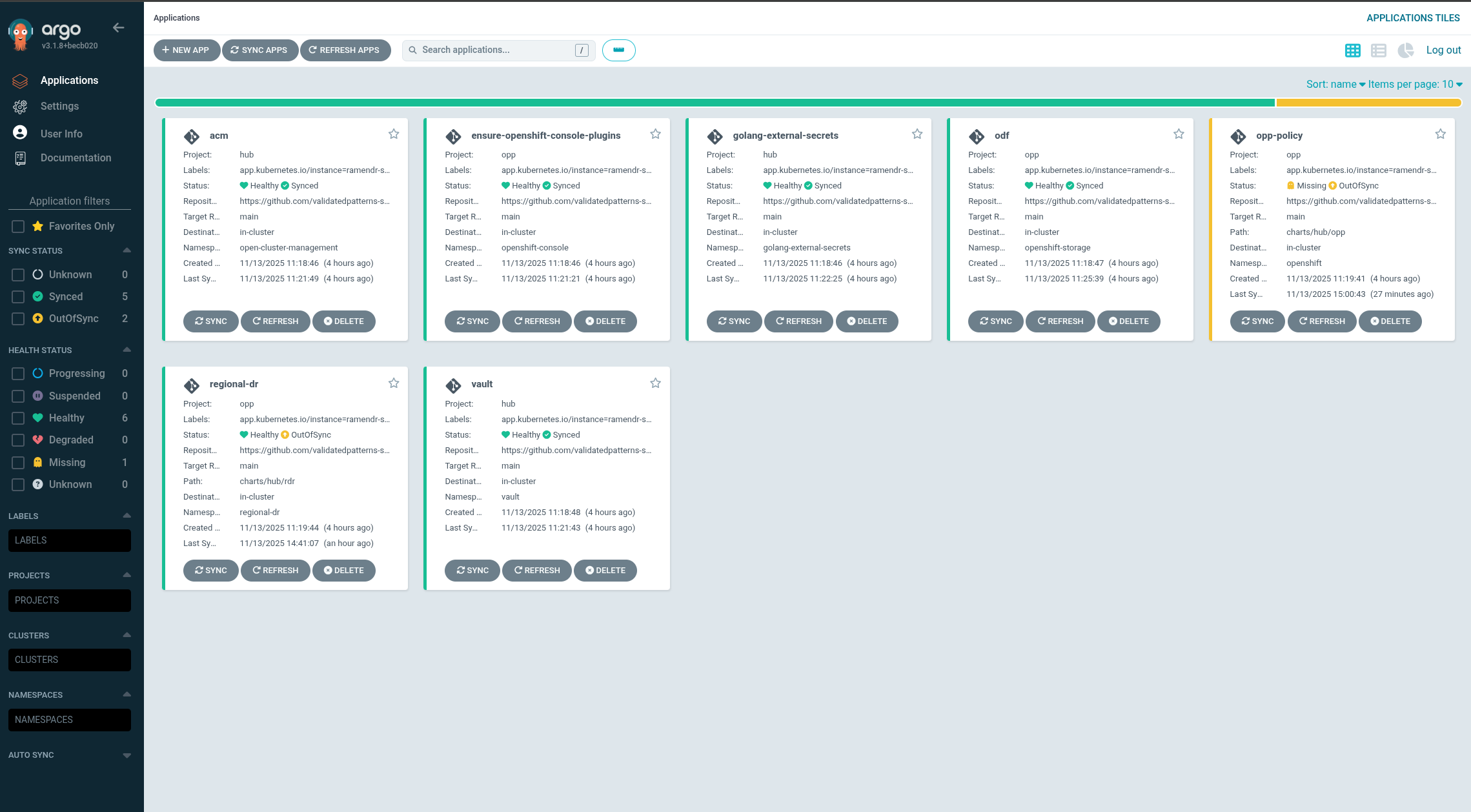

Wait for everything to deploy to all the clusters. This might take up to another hour from when the managed clusters finish building. You can track the progress through the Hub ArgoCD UI from the nines menu, especially the "opp-policy" and "regional-dr" applications. Most of the critical resources are in the regional-dr application. At present, the opp-policy application may show as missing or out of sync, and the regional-dr application may show OutOfSync, even when both are healthy. We are working on a fix.

Figure 3. RamenDR Starter Kit Applications

Eventually, the virtual machines are deployed and the Disaster Recovery Placement Control (DRPC) shows that the resources are protected. To reach this screen, go to All Clusters → Data Services → Disaster Recovery → Protected Applications on the hub cluster. Kubernetes objects normally synchronize faster than application volumes. When both indicators show Healthy, it is safe to trigger a failover:

Figure 4. RamenDR Starter Kit Applications

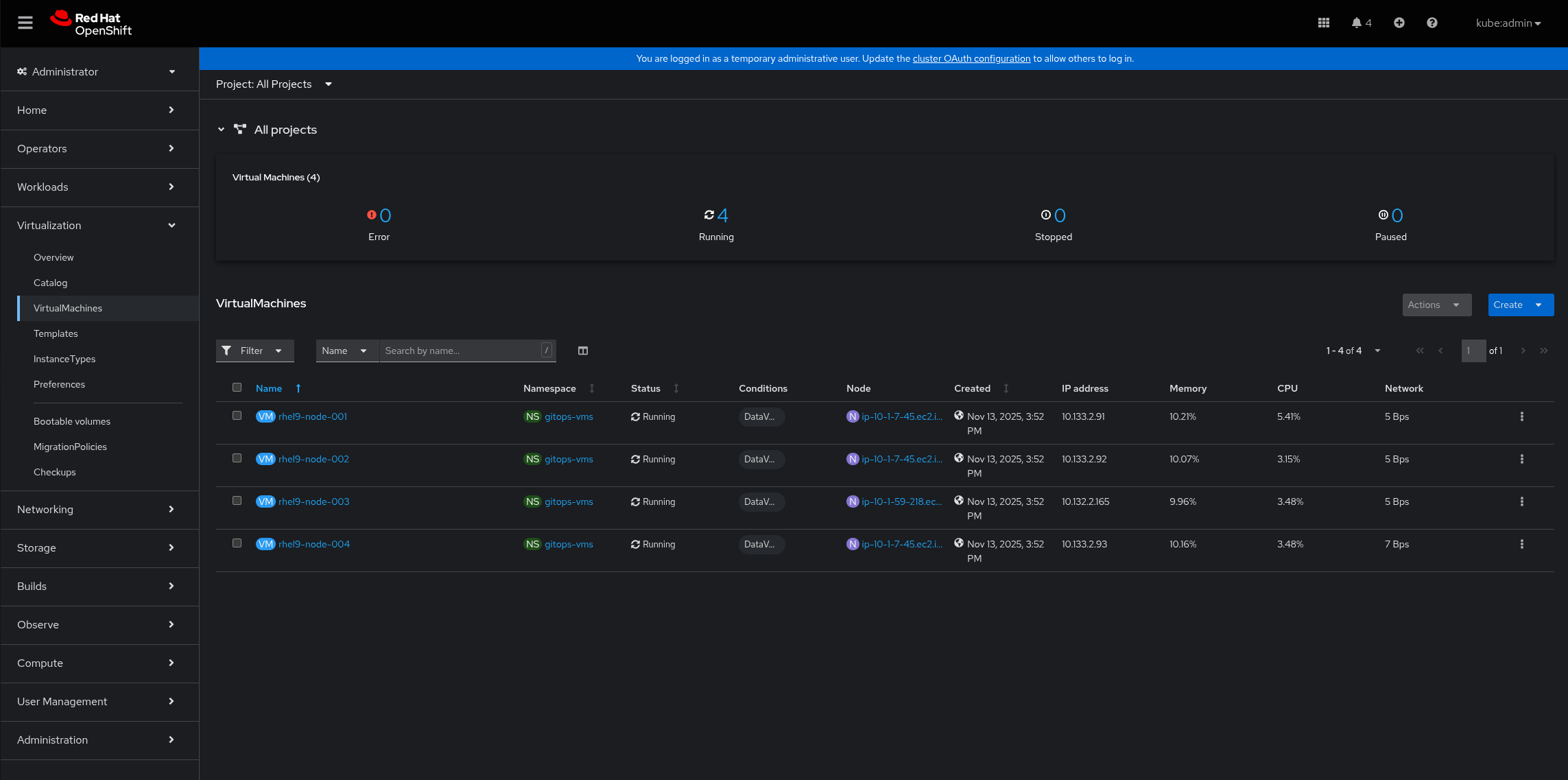
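The waits described above (for the managed clusters to build, and then for the applications to deploy) can each take up to an hour, so you may prefer to poll from a terminal instead of watching the console. The following is a generic polling sketch, not something the pattern provides: the function name wait_for is hypothetical, and the command to poll is left to you.

```shell
#!/bin/sh
# Hypothetical helper: run a command repeatedly until it succeeds or the
# timeout is reached. Usage: wait_for TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND...
# Only the time spent sleeping is counted toward the timeout, so the real
# elapsed time is somewhat longer if the command itself is slow.
wait_for() {
    timeout=$1
    interval=$2
    shift 2
    elapsed=0
    while ! "$@" >/dev/null 2>&1; do
        if [ "$elapsed" -ge "$timeout" ]; then
            echo "timed out after ${elapsed}s: $*" >&2
            return 1
        fi
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
    return 0
}
```

Pass any command that exits non-zero until the resource you are waiting on is ready, for example an oc query of your choice, with a timeout of 3600 seconds or more to match the durations mentioned above.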

To see the VMs themselves running, go to Virtualization → VirtualMachines on the primary cluster. The pattern configures four RHEL 9 VMs by default:

Figure 5. RamenDR Starter Kit Trigger Failover, part 1

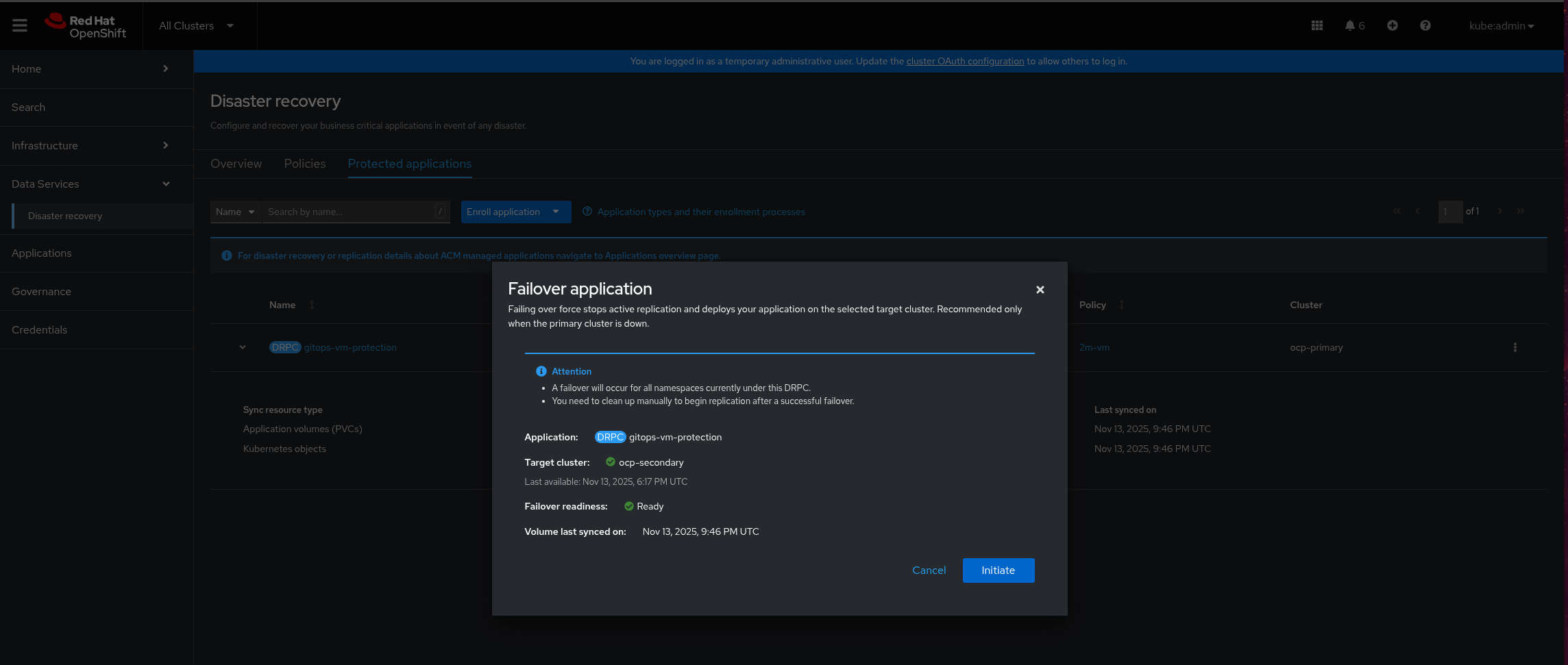

Clicking the "Failover" option opens a modal dialog that shows where the failover will move the workload and the time of the last known good state of the workload. Click "Initiate" to begin the failover:

Figure 6. RamenDR Starter Kit Trigger Failover, part 2

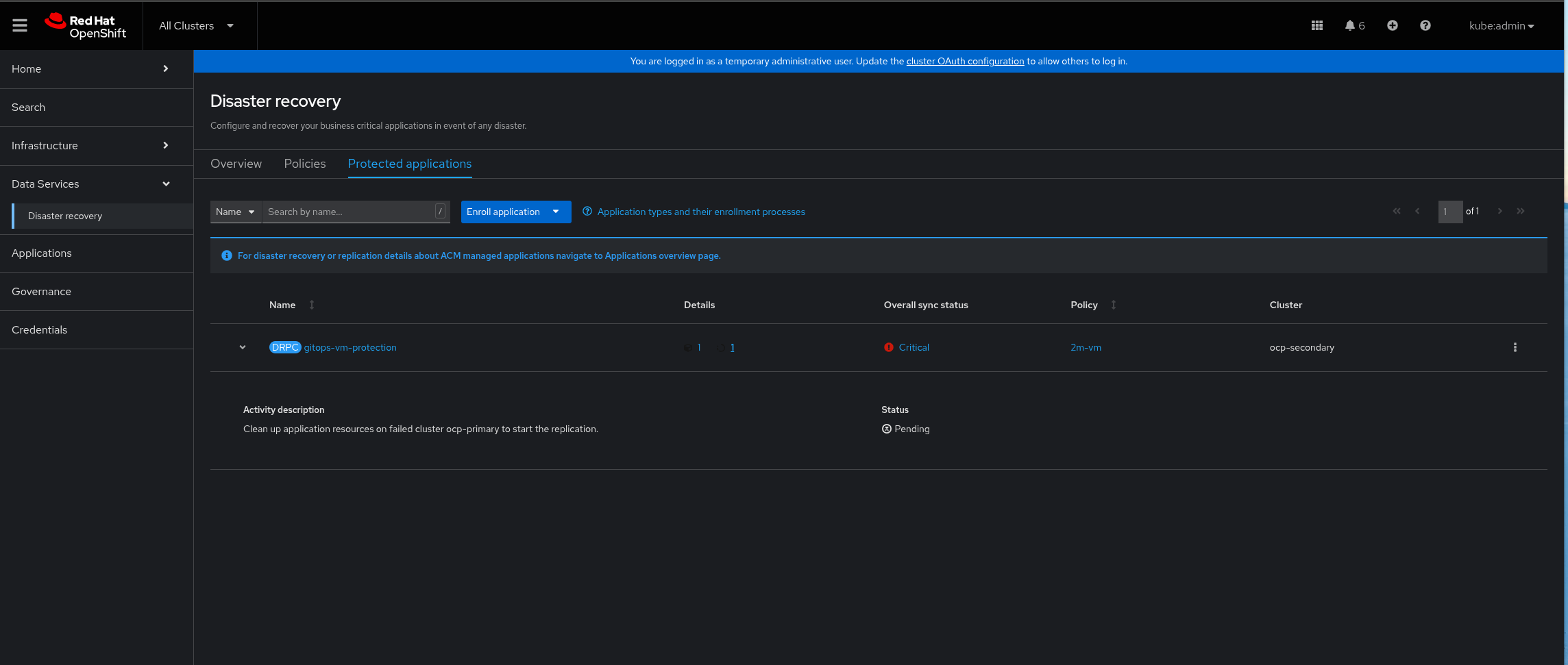

While the failover is in progress, you can watch it in the activity area. When the failover completes, the console reports (for a discovered application) that you must clean up application resources to allow replication to start in the other direction. Notice that the primary cluster should now be different:

Figure 7. RamenDR Starter Kit Failover Cleanup

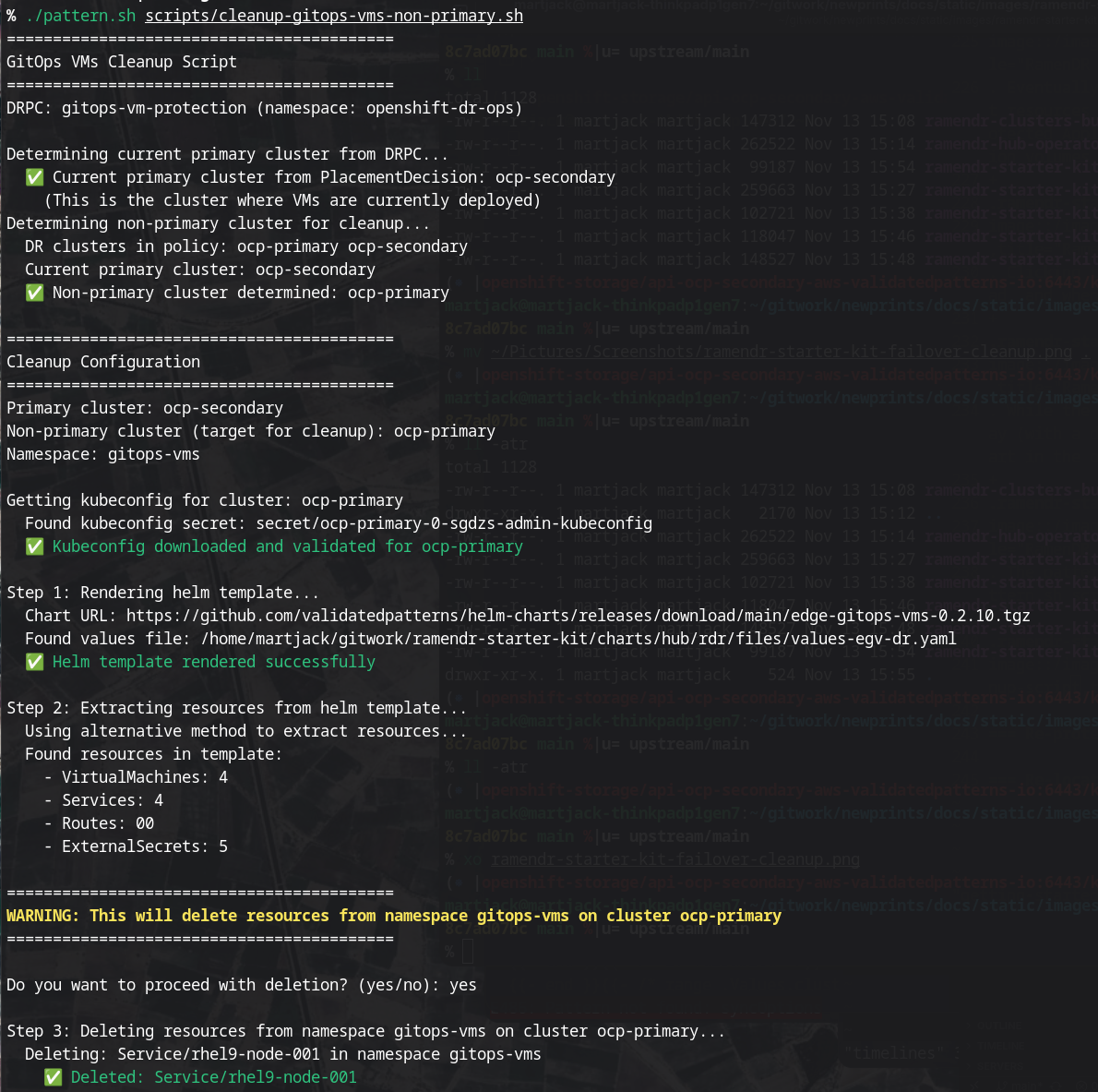

The pattern provides a script to do this cleanup. With KUBECONFIG set for your hub cluster, run:

$ ./pattern.sh scripts/cleanup-gitops-vms-non-primary.sh

Figure 8. RamenDR Starter Kit Failover Cleanup

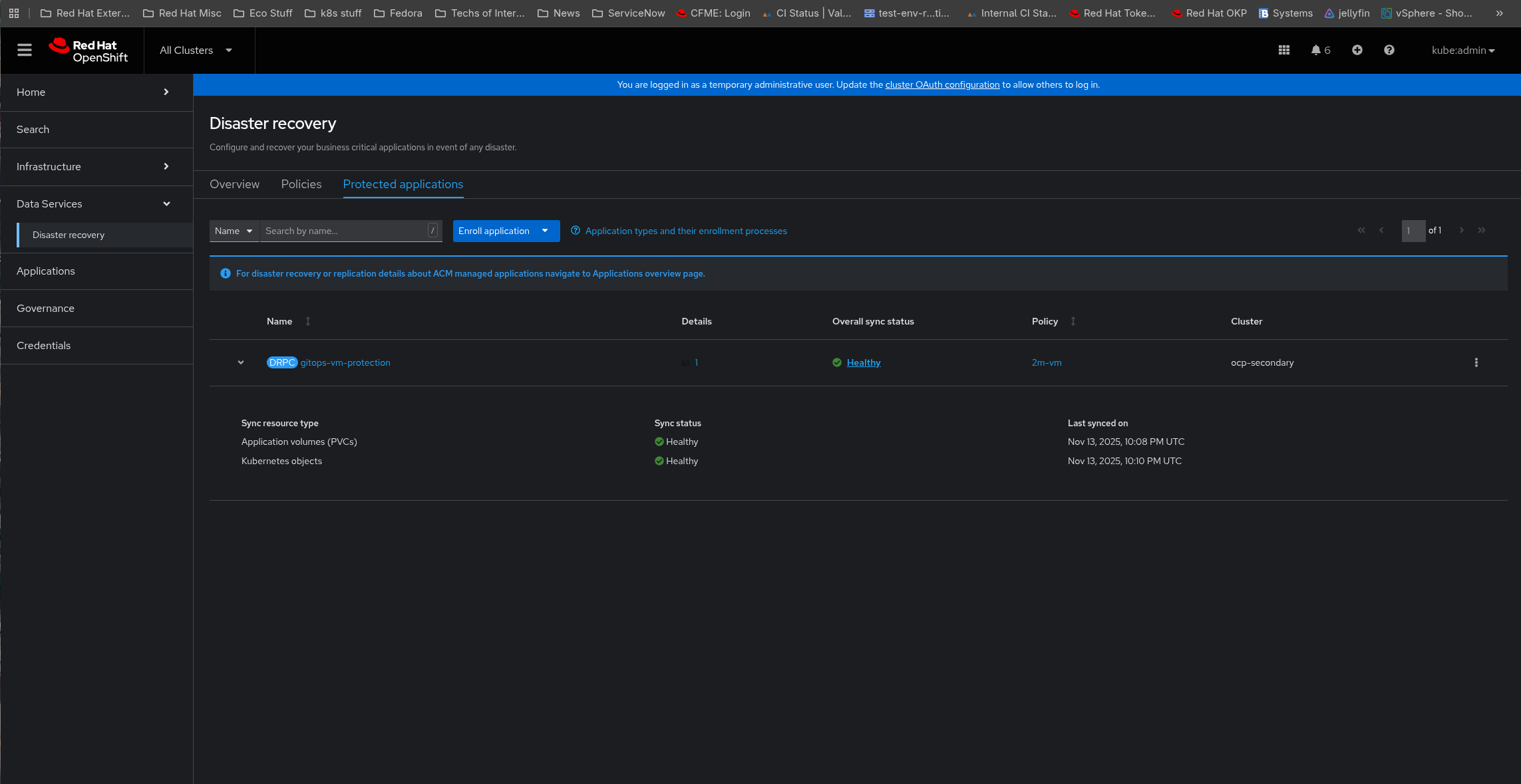

After a few minutes, the resources should show as healthy and protected again (the PVCs take a few minutes to synchronize):

Figure 9. RamenDR Starter Kit Reprotected
